
Home Lab Part 4: Shrinking/Consolidating Home Lab

Before getting into the server upgrade details, I started to consolidate and optimize the space used by my home lab. All the devices I had in the rack were shallow-depth appliances, about 9" deep and some of them even less. While researching, I found an interesting fact: pro audio equipment also uses the 1U format, and most of it is shallow too.

There are many portable rack cases on the market. Long story short, I ended up moving from the heavy wall-mount 12U rack to this case:

Roto-Molded 6U Shallow Rack 1SKB-R6S (https://www.skbcases.com/music/products/proddetail.php?f=&id=792&o=&c=114&s=)

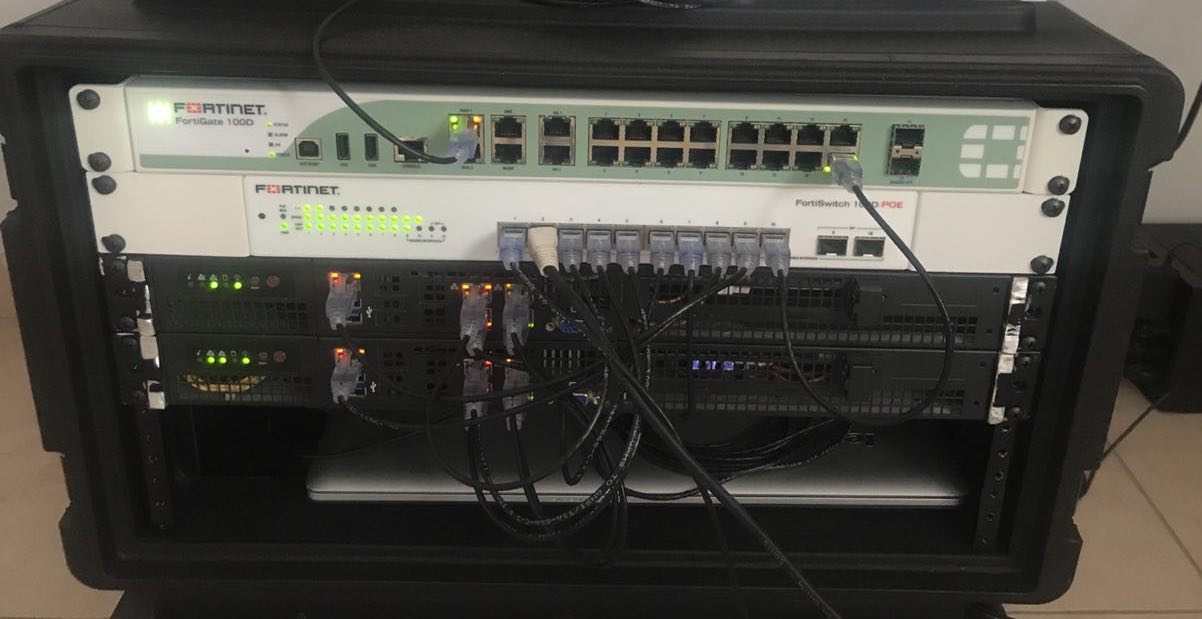

And this is a picture I found in my records:

I gave the FortiSwitch units to coworkers and kept a FortiGate 100D and a FortiSwitch with PoE to power the FortiAPs I had in the lab. I also improved the cable management :). Those are Monoprice SlimRun Cat6A Ethernet patch cables, and I got many of them in different lengths from here: https://www.amazon.com/gp/product/B01BGV2C7U/.

Let's go back to the server upgrade. Back in February of 2018, Intel announced the next generation of Xeon-D processors "architected to address the needs of edge applications and other data center or network applications constrained by space and power." Source: https://newsroom.intel.com/news/intel-xeon-d-2100-extends-intelligence-edge-enabling-new-capabilities-cloud-network-service-providers/

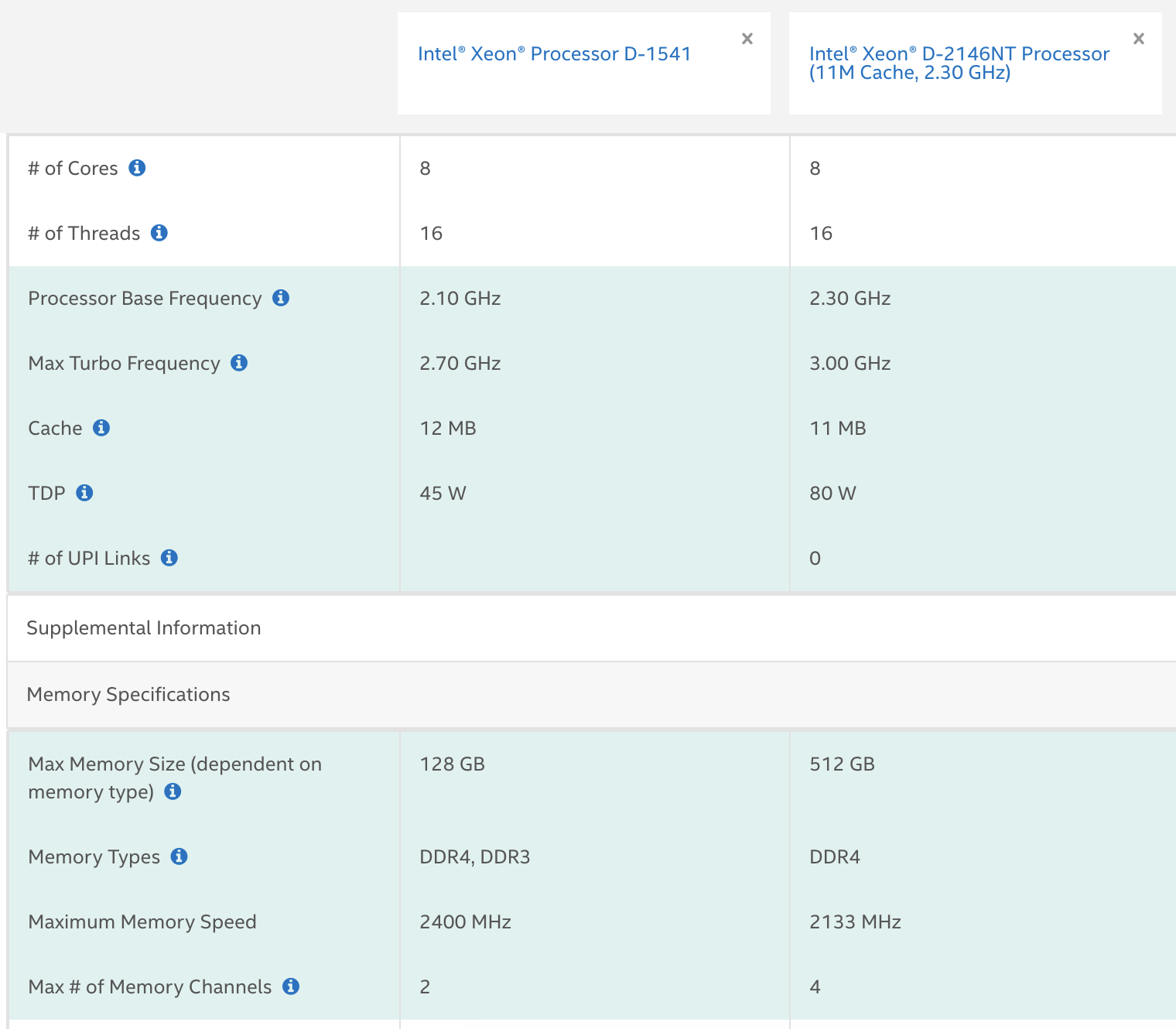

To give an idea, a comparison with the CPU in my two previous servers can be found here:

From that chart, I can highlight a slight bump in clock speed, from 2.1 GHz to 2.3 GHz, but at least twice the TDP, which means that it needs more cooling to work correctly. CPU power didn't carry much weight in my decision. However, the 2100 series has twice as many memory channels as the 1500 series and supports up to 512GB of RAM versus 128GB, which was a more compelling reason to upgrade.
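To put those two factors side by side, here is a small sketch that uses only the figures quoted above (2.1 GHz vs 2.3 GHz, 128GB vs 512GB). The variable and function names are my own and purely illustrative.

```python
# Figures quoted in the post; names are illustrative, not from any vendor tool.
OLD_CLOCK_GHZ = 2.1    # Xeon-D 1500 part in the old servers
NEW_CLOCK_GHZ = 2.3    # Xeon-D 2100 part being considered
OLD_MAX_RAM_GB = 128
NEW_MAX_RAM_GB = 512


def percent_gain(old, new):
    """Relative increase from old to new, in percent."""
    return (new - old) / old * 100


clock_gain = percent_gain(OLD_CLOCK_GHZ, NEW_CLOCK_GHZ)
ram_gain = percent_gain(OLD_MAX_RAM_GB, NEW_MAX_RAM_GB)

print(f"Clock speed gain: {clock_gain:.1f}%")
print(f"Max RAM gain:     {ram_gain:.0f}%")
```

The clock bump is under 10%, while the memory ceiling quadruples, which is why memory drove the decision.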

Back in June 2018, Supermicro launched the SuperServer SYS-E300-9D based on the Xeon-D 2100 series, a small form factor micro-server that keeps the 1U height but not the full rack width, so it needs an extra rack mounting kit or a rack tray, plus an external power adapter. Then in November 2018, Supermicro launched two more models, the SYS-E300-9D-4CN8TP and the SYS-E300-9D-8CN8TP. After reading the technical specifications, I was more inclined toward the 8CN8TP option.

With that upgrade, I planned to shrink my setup from two 1U servers with 128GB of RAM each, 256GB in total, into a single system in an even smaller format, about 3/4 the width of my previous servers. Another benefit would be lower power consumption. One caveat I found while researching was that some reports described it as noticeably louder than the Xeon-D 1500 servers, which didn't worry me as I had already addressed that issue using Noctua fans.

So I put the two Xeon-D 1500 servers up for sale and prepared a new BOM:

- 1 x SYS-E300-9D-8CN8TP

- 1 x RSC-RR1U-E8 1U Riser Card

- 1 x Supermicro AOC-SLG3-2M2 PCIe Add-On Card for up to Two NVMe SSDs

- 4 x Supermicro Certified MEM-DR464L-SL01-LR26 64GB DDR4-2666 LP ECC LRDIMM

- 1 x Supermicro 64GB 520 MB/s Solid State Drive (SSD-DM064-SMCMVN1)

- 2 x Samsung 2TB 970 EVO NVMe M.2 Internal SSD
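As a quick sanity check, the sketch below confirms that the new BOM matches the combined memory of the two old servers and adds up the raw NVMe capacity. The quantities come from the list above; the names are illustrative and the storage figure is raw capacity, not usable space.

```python
# Quantities taken from the BOM above; names are illustrative only.
OLD_SERVERS = 2
OLD_RAM_PER_SERVER_GB = 128

NEW_DIMMS = 4
NEW_DIMM_SIZE_GB = 64

NVME_DRIVES = 2
NVME_DRIVE_SIZE_TB = 2


def total(count, size):
    """Total capacity for `count` identical parts of a given size."""
    return count * size


old_ram_gb = total(OLD_SERVERS, OLD_RAM_PER_SERVER_GB)
new_ram_gb = total(NEW_DIMMS, NEW_DIMM_SIZE_GB)
raw_nvme_tb = total(NVME_DRIVES, NVME_DRIVE_SIZE_TB)

print(f"Old setup RAM: {old_ram_gb} GB")
print(f"New setup RAM: {new_ram_gb} GB")
print(f"Raw NVMe capacity: {raw_nvme_tb} TB")
```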

While planning the upgrade, I started to look into other optimizations and found a blog where David Chung shared his experience building an "SDDC in a Box". The posts can be found here: Part 1 (https://vcloudone.com/2018/10/sddc-in-a-box-part-1-bom) and Part 2 (https://vcloudone.com/2018/10/sddc-in-a-box-part-2-decision-making-process). He also used an SKB rack, but in the 4U size. His use case is slightly different, as he packed in four nodes to demo SDDC features, which is great. I wanted to borrow one idea from his setup: he takes care of the airflow inside the rack using a set of 120mm Noctua fans. However, I don't have the skills to build the 3U fan panel. Thankfully, I found a website that sells panels that hold different fan sizes, along with other very interesting solutions like fan controllers.

I ordered these panel bundles:

And I wanted to add another panel with 80mm fans as an exhaust:

I sent an email asking if I could order the panels and other accessories of the bundle without the included fans, as I would order Noctua fans to use in this part of the setup. They said yes, so I made the purchase.

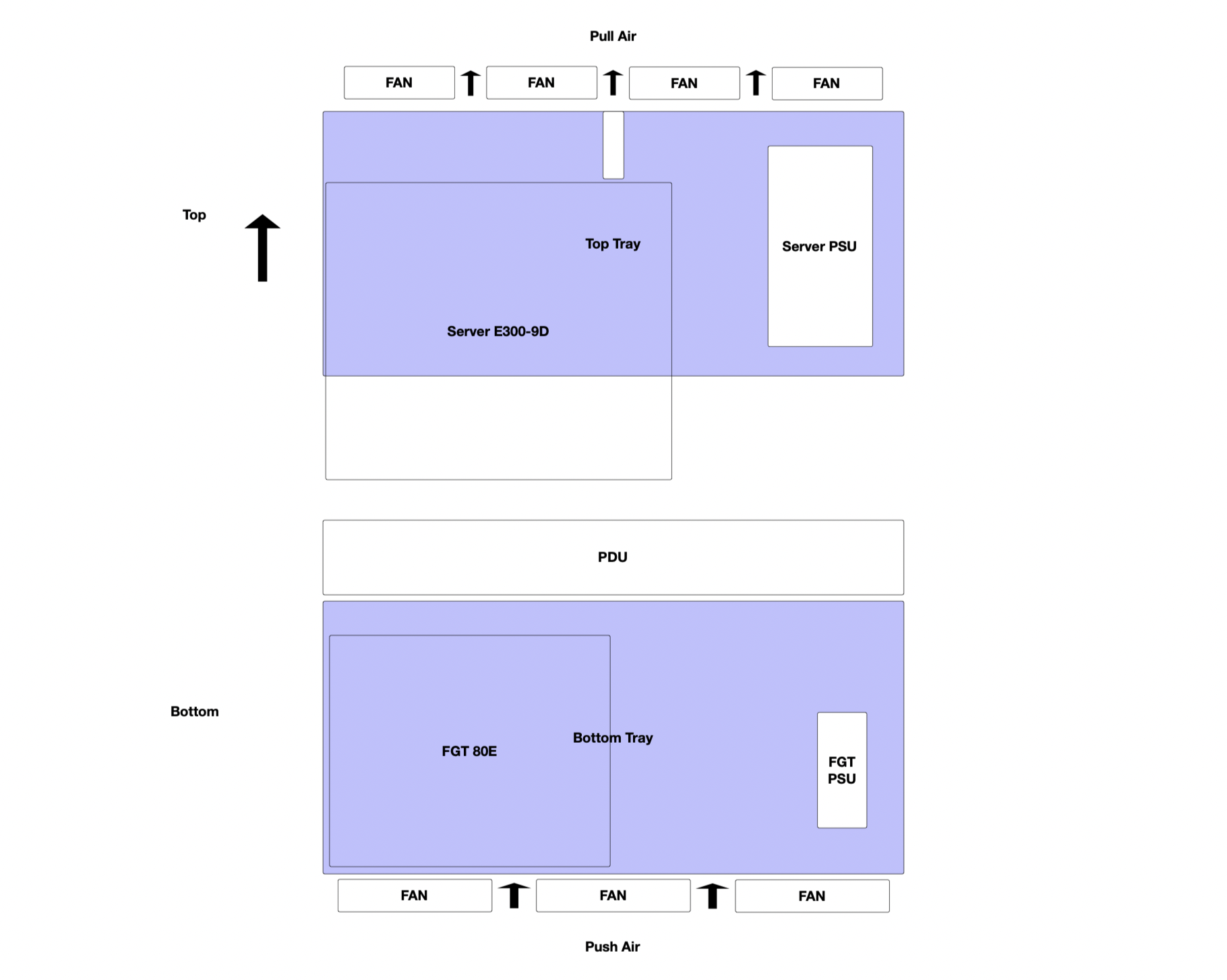

As there was no longer a need for a stack of switches, I moved from a FortiGate 100D to a FortiGate 80E, which is fanless (0 dB of noise), so the whole setup came down to the server, the FortiGate 80E, and two FortiAPs to provide wireless access.

This is a drawing I made back at the time while planning the insides of this mini datacenter idea:

A short explanation:

To push air in, I used the 3U panel with 3 x NF-S12A FLX, which are 120mm fans specially designed for cases, with improved airflow and pressure efficiency.

I found this picture of the pull panel:

This is the complete setup, where I also ended up with a 4U rack, using only 2U for devices, 1U for the PDU, and a free 1U space for airflow:

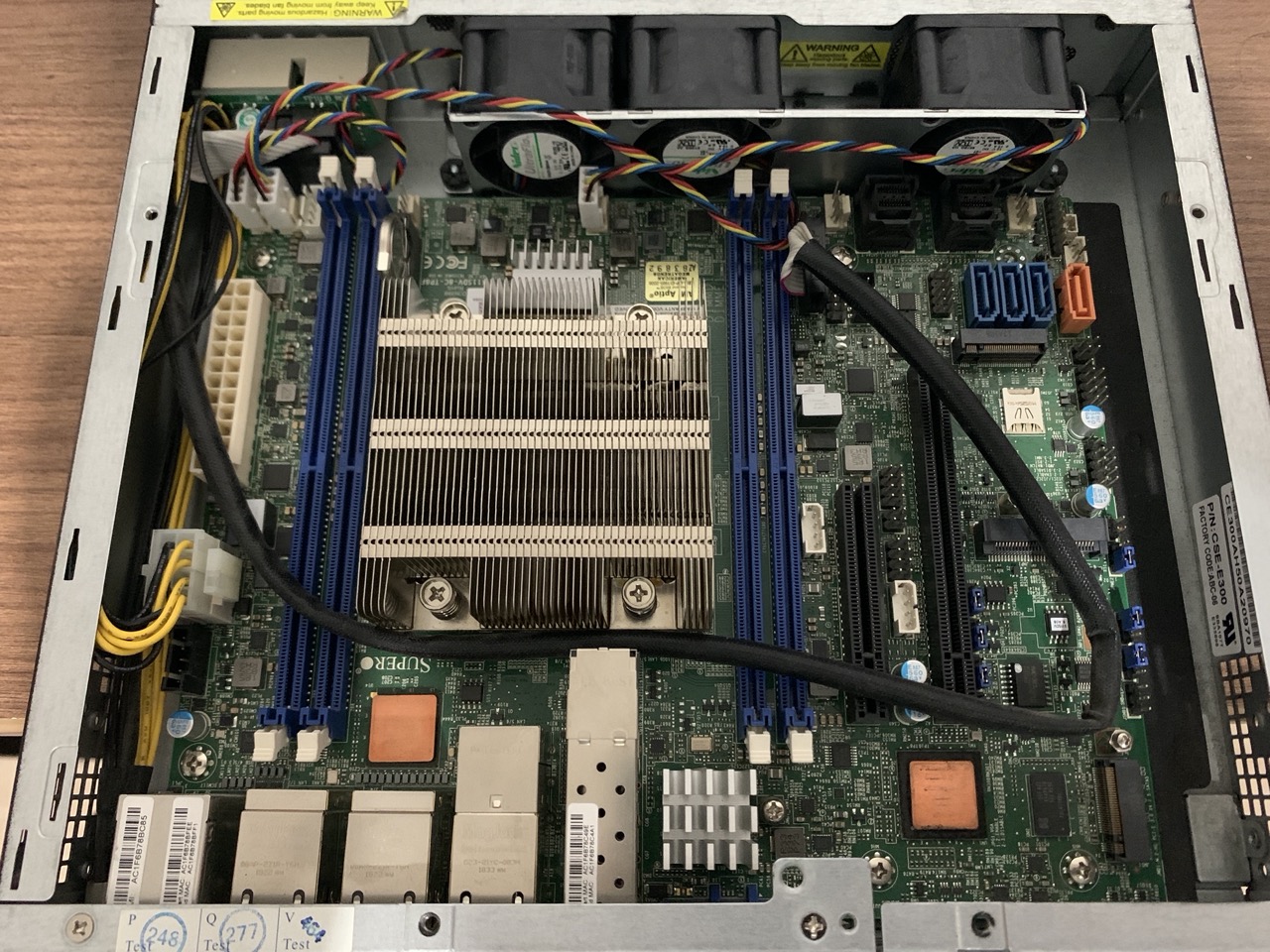

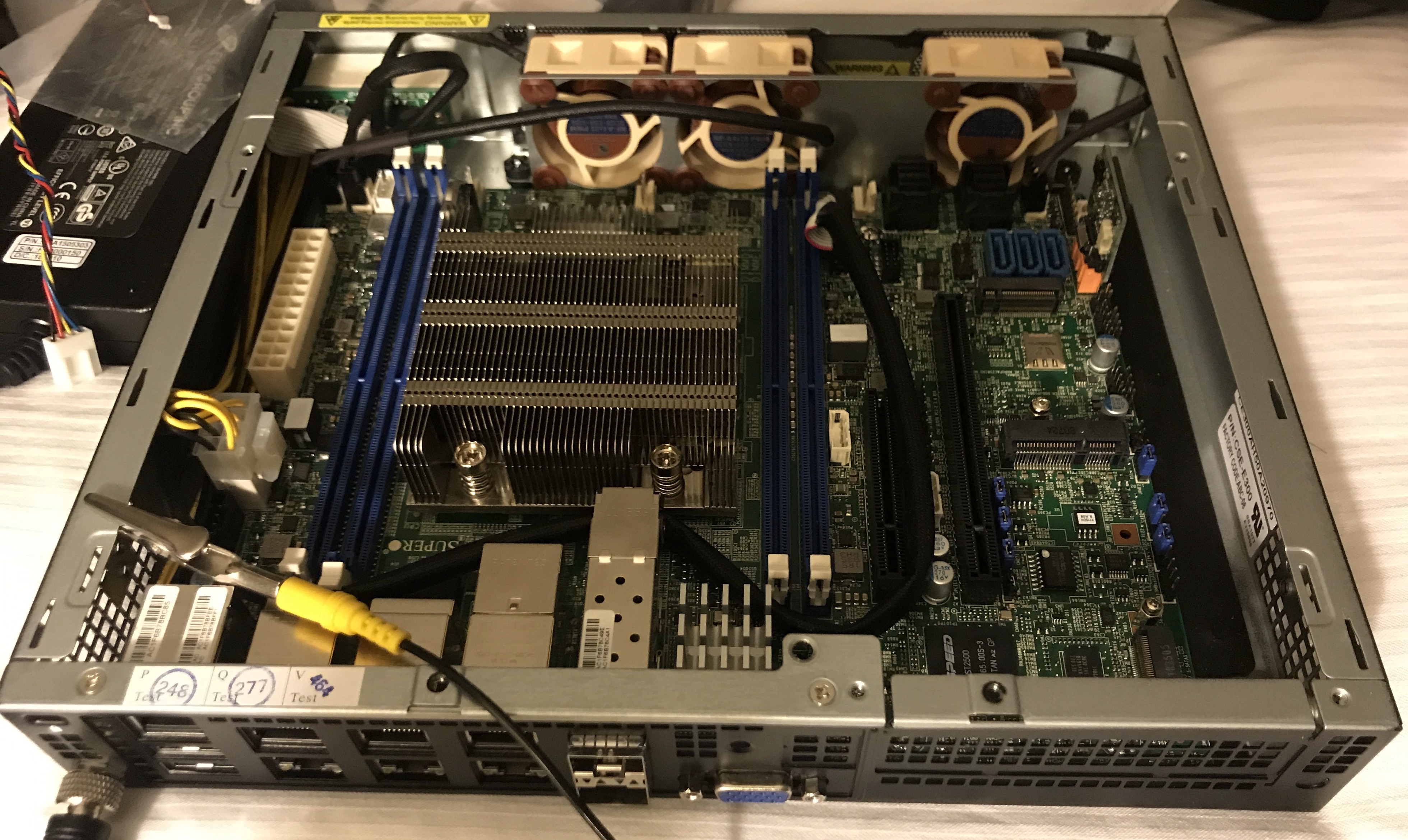
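The rack unit budget for this layout can be written down as a tiny check. This is only a sketch of the allocation described above; the dictionary keys are my own labels.

```python
# Rack unit allocation as described in the post; labels are illustrative.
RACK_UNITS = 4

allocation = {
    "devices": 2,                  # server and FortiGate 80E
    "PDU": 1,
    "free space for airflow": 1,
}

used = sum(allocation.values())
remaining = RACK_UNITS - used

# Fail loudly if the plan ever exceeds the rack size.
assert used <= RACK_UNITS, "allocation does not fit in the rack"

for item, units in allocation.items():
    print(f"{item}: {units}U")
print(f"Used {used}U of {RACK_UNITS}U, {remaining}U unallocated")
```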

A small detail about the cooling inside the server. This is a picture of the passive CPU cooler:

And this is after replacing the stock fans with a set of 3 x Noctua NF-A4x20:

All went fine until I started to put some serious load on the system. There was 256GB to use, so why not stress this little box with some crazy deployments? That's when I found that, even with the case fans, the server's CPU began to throttle. After a bit of research, I discovered that the Noctua fans didn't provide enough airflow pressure inside the server. A quick workaround was to put all my VMs inside a vApp and then limit its CPU from 20 GHz to 17 GHz. I kept using this workaround for my tests for a while, but I was not happy with it, and going back to the stock fans was not an option.
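To see how much compute that workaround gave up, here is a minimal sketch using the 20 GHz and 17 GHz figures above. The conversion to MHz assumes the limit field is entered in MHz, which is an assumption on my part; check the units in your own client before copying the number.

```python
# Figures from the post; names are illustrative.
UNLIMITED_GHZ = 20   # CPU capacity available to the vApp before the limit
LIMIT_GHZ = 17       # CPU limit applied as a workaround

# Share of CPU capacity given up to avoid thermal throttling.
reduction_pct = 100 * (UNLIMITED_GHZ - LIMIT_GHZ) / UNLIMITED_GHZ

# Assumption: the limit is entered in MHz rather than GHz.
limit_mhz = LIMIT_GHZ * 1000

print(f"CPU capacity given up: {reduction_pct:.0f}%")
print(f"Limit to enter (assuming MHz): {limit_mhz}")
```

In other words, the workaround traded about 15% of the CPU capacity for a system that stayed within its thermal limits.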

Spoiler alert: I shrank the setup even more and ended up with a Mini-ITX system bringing together two worlds: "/r/sffpc/" and "/r/homelab/". This seems to be the first record of an AMD Epyc 3251 with 256GB of RAM and NVMe storage inside a 12.7L case, such as the Ncase M1. This "ultimate" build deserves its own post, and I promise I won't take that long to publish it.

You can find my previous posts using these links:

- Home Lab Part 1: Why build one?

- Home Lab Part 2: Skills and resources before build one

- Home Lab Part 3: Building the first version of my Home Lab